Study design and participants

We conducted a two-arm, parallel-group, randomised, adaptive Bayesian optimisation trial of a remotely delivered digital intervention. Ethical approval for the trial was granted by the Wales Research Ethics Committee (Wales REC 6, 21/WA/0173). The trial was registered prospectively at ClinicalTrials.gov (CTR: NCT04992390). The study protocol was deposited in a public repository (osf.io/2xn5m), and the trial had a data monitoring committee (DMC).

The study was advertised via email and Twitter directly from the Intensive Care Society to its membership network, mailing list, and existing social media followers, supplemented by advertisements through Facebook. Advertisements contained a link to the study website, which included a summary of study information, a video explaining intrusive memories (IMs), and a participant information sheet.

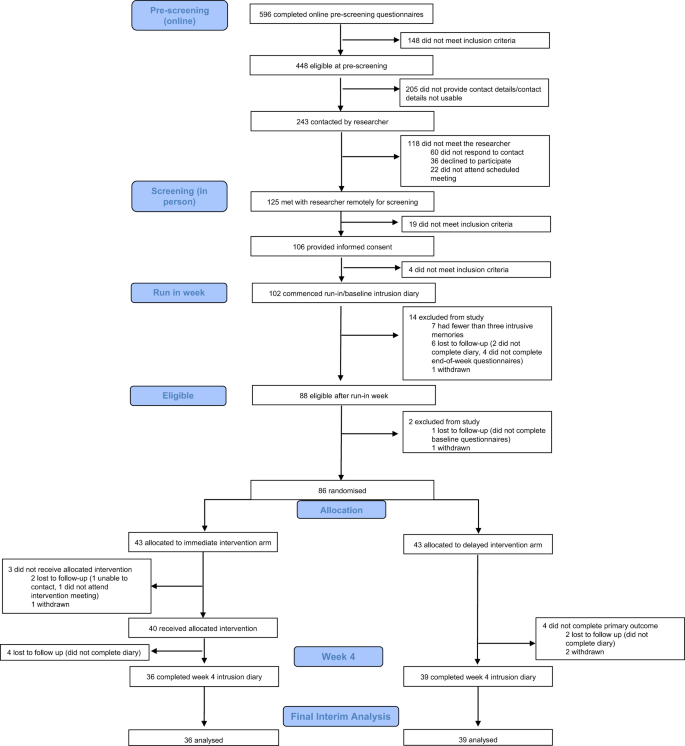

Eligible participants were adults aged 18 years or older, who worked in a clinical role in an NHS ICU or equivalent during the COVID-19 pandemic (e.g. as a member of ICU staff or deployed to work in the ICU during the pandemic), who had experienced at least one traumatic event related to their work (meeting criterion A of the DSM-5 criteria for PTSD: “exposure to actual or threatened death, serious injury, or sexual violence” by “directly experiencing the traumatic event(s)” or “witnessing, in person, the event(s) as it occurred to others”), had IMs of the traumatic event(s), and had experienced at least three IMs in the week prior to screening. Further, participants had internet access; were willing and able to be contacted by the research team during the study period; were able to read, write, and speak English; and were willing and able to provide informed consent and complete study procedures. The exclusion criterion was having fewer than three IMs during the baseline week after informed consent (i.e. the run-in week in Fig. 1). We did not exclude those undergoing other treatments for PTSD or its symptoms, so that the study was as inclusive as possible to meet the challenges that ICU staff were facing during the COVID-19 pandemic. Written informed consent was obtained before participation (using an electronic signature via email).

CONSORT diagram showing enrolment, allocation, and the analysis populations.

Randomisation and masking

Participants were randomly assigned (1:1) using a remote, secure web-based clinical research system (P1vital® ePRO) to either the immediate intervention arm (immediate access to the brief digital imagery-competing task intervention plus symptom monitoring for 4 weeks) or the delayed intervention arm (usual care for 4 weeks followed by access to the intervention plus symptom monitoring for 4 weeks).

Randomisation occurred following the baseline week, and after baseline questionnaires (see protocol; osf.io/2xn5m). The allocation sequence was computer-generated consecutively using minimisation. Minimisation allocated the first participant to either arm; thereafter, allocation was preferential to the arm with the fewest participants, to minimise the difference in group sizes. The randomisation allocation percentage was originally set to 66% and altered to 85% after 61 participants were randomised (to ensure balance of groups after the early stopping decision, see “Results”). Overall, 86 participants were randomly assigned to the delayed (n = 43) or immediate (n = 43) arm.
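The allocation rule can be illustrated with a minimal Python sketch. It assumes that the "allocation percentage" is the probability of assigning the next participant to the currently smaller arm, that ties are broken with equal probability, and that minimisation was on group size only. The function names, the tie-breaking rule, and the seed are hypothetical and are not taken from the P1vital® ePRO system.

```python
import random

def allocate(n_immediate, n_delayed, p_preferred, rng):
    """Return the arm for the next participant.

    Assumption: the arm with fewer participants is chosen with
    probability p_preferred; equal arms are split 50:50.
    """
    if n_immediate == n_delayed:
        return rng.choice(["immediate", "delayed"])
    smaller = "immediate" if n_immediate < n_delayed else "delayed"
    larger = "delayed" if smaller == "immediate" else "immediate"
    return smaller if rng.random() < p_preferred else larger

def simulate(n_total=86, switch_after=61, seed=1):
    """Simulate one allocation sequence (the seed is arbitrary)."""
    rng = random.Random(seed)
    counts = {"immediate": 0, "delayed": 0}
    for i in range(n_total):
        # 66% preference for the first 61 participants, 85% afterwards
        p = 0.66 if i < switch_after else 0.85
        arm = allocate(counts["immediate"], counts["delayed"], p, rng)
        counts[arm] += 1
    return counts

counts = simulate()
print(counts)
```

Raising the preference probability makes the rule more deterministic, which pulls the two arm sizes together more quickly over the remaining allocations.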

Participants were blinded to group allocation. The statistician who conducted the interim analyses (VR), and researchers who contacted participants and facilitated the conduct of the task intervention were not blinded to group allocation. A researcher supported the technical use of the intervention in the first guided session in i-spero® and was aware that the participant completed a cognitive task. All outcome data (including the primary outcome 4 weeks later) were self-reported by participants independently and directly into the online platform (P1vital® ePRO), removing the need for researcher input and reducing the risk of bias. That is, outcome assessments were masked to group allocation since they were self-reported by participants in the digital platform. Scoring of data was automatic in P1vital® ePRO, and thus independent from the researchers guiding the intervention session.

The intervention

Immediately after randomisation, participants in the immediate intervention arm gained access to the digital imagery-competing task intervention with symptom monitoring of IMs for 4 weeks. The intervention was delivered on a secure web platform (i-spero®) via smartphone, tablet, or computer. Participants had an initial researcher-guided session (~1 h, via Microsoft Teams) and thereafter used i-spero® in a self-directed manner (~25 min; with the option for support). The researcher-guided session consisted of step-by-step instructions, animated videos and multiple-choice questions. Participants were instructed to list their different IMs by typing a brief description. They selected one IM from their list, and very briefly brought the image to mind. After instructions on playing the computer game Tetris® using mental rotation, they played for 20 min. Finally, they were instructed on monitoring IMs in i-spero® and encouraged to use the intervention to target each memory on their list.

The brief digital intervention on i-spero® and P1vital® ePRO are owned and manufactured by P1vital Products Ltd. Tetris® has been licensed for use within i-spero® from The Tetris Company. P1vital® ePRO, i-spero® and the brief digital intervention have been developed following a formal computerised system validation methodology which complies with Good Clinical Practice, FDA 21CFR Part 11 and ISO13485 Quality Management System.

Assessments

Baseline

After informed consent, participants completed a daily IM diary online for 7 days (baseline week, days 0–6, i.e. the run-in week in Fig. 1) to record the number of IMs of traumatic events. This diary was adapted from previous studies [8, 20] for digital delivery using P1vital® ePRO. Prompted by email/SMS once daily, participants were asked “Have you had any intrusive memories today?” and, if they answered “yes”, selected how many. Those who reported three or more IMs during the baseline week and completed baseline questionnaires (sent after the baseline week to those meeting the study entry criteria, see Fig. 1 and Supplementary Table 1) were randomised. The total number of IMs of traumatic event(s) recorded during the baseline week was used as a baseline covariate when modelling.
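As a rough illustration of how the diary feeds the entry check, the sketch below sums seven daily counts and applies the threshold of three IMs. The function names and the example diary are hypothetical. Missing days are simply skipped here, whereas the trial imputed missing diary values (see "Statistical analysis").

```python
def weekly_total(daily_counts):
    """Sum the daily intrusive-memory counts for one diary week.

    daily_counts: list of 7 entries, one per day; None marks a missing day.
    Missing days are skipped in this sketch (no imputation).
    """
    if len(daily_counts) != 7:
        raise ValueError("expected one entry per day for 7 days")
    return sum(c for c in daily_counts if c is not None)

def eligible_for_randomisation(daily_counts, questionnaires_done, minimum=3):
    """True if the baseline week has at least `minimum` IMs and the
    baseline questionnaires were completed."""
    return questionnaires_done and weekly_total(daily_counts) >= minimum

baseline_week = [2, 0, 1, None, 3, 0, 1]  # hypothetical diary, days 0-6
total = weekly_total(baseline_week)
print(total, eligible_for_randomisation(baseline_week, True))
```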

Primary outcome

During week 4, participants in both arms were asked to again complete the daily IM diary for 7 days (i.e. from day 22 to 28, where day 1 is the guided session in the immediate arm/equivalent timeframe in the delayed arm) to record the number of IMs of traumatic event(s). The primary outcome measure was the total number of IMs recorded by participants in this daily IM diary in week 4.

The outcome measure was derived from diaries used in clinical practice [31], laboratory [20] and patient studies [8]. Positive relationships between diary IMs and the Impact of Events Intrusion subscale indicate convergent validity with PTSD symptoms [32]. Count data, which have no upper bound, provide greater sensitivity than questionnaires with finite categories. Daily completion can reduce the retrospective recall biases that arise when measures are completed later. Service users report that the diary is straightforward, with typically good adherence and limited missing data [32]. Remote and digital completion of the diary here meant it was assessor blinded.

The clinical meaning of a change in score may depend on the trauma population, as single-event trauma incurs fewer IMs than repeated trauma. For a PTSD diagnosis, the Clinician-Administered PTSD Scale for DSM–5 (CAPS-5) [33] requires at least two IMs over the past month. The CAPS-5 maximum score is “daily”, and reducing this to “once-or-twice a week”/“never” (CAPS-5 mild-minimum) represents a clinically meaningful outcome target [34]. The CAPS-5 was not administered in the current study because a clinician interview would have added to participant burden. However, as it is a gold standard measurement in the field, we note its scoring range here to indicate the number of IMs typically assessed clinically.

Safety

Adverse events were monitored through a standardised question (“have you experienced any untoward medical occurrences or other problems?”) at week 4 and week 8, as well as through any spontaneous reports from participants at any time point during the study.

Other outcomes

The present article focuses solely on the sequential Bayesian analyses of the primary outcome measure. A standard analysis (using frequentist statistics) of the final study population, including secondary outcome measures, will be reported elsewhere [35] (CTR: NCT04992390).

Statistical analysis

Informed by power estimates based on an effect size of d = 0.63 (based on pooled information from three previous related RCTs [8, 16, 17]) for the primary outcome, we planned to recruit up to 150 participants, with the potential to end recruitment earlier based on interim analyses. We therefore employed a sequential Bayesian design with maximal sample size [30, 36]. This allowed interim analyses to guide decision-making, such as when to adjust aspects of the intervention to optimise its effect, and when sufficient evidence had been collected to end the optimisation trial and proceed to a follow-up pragmatic RCT to test the clinical effectiveness of the optimised intervention.

The fundamental idea of a Bayesian approach is simple [37]: the parameters that we are trying to estimate are treated as random variables with distributions that represent our initial beliefs and uncertainty. After observing data, initial beliefs can be updated with the new information to get improved beliefs. This contrasts with the prevailing “frequentist” statistical frameworks, where these parameters are fixed and probabilities are seen as long-run frequencies generated by some unknown process. The principal outcome of fitting a Bayesian model is the posterior distribution: a probability distribution that indicates how probable particular parameter values are, given the prior distribution (representing initial beliefs) and the observed data. In a Bayesian model, the 95% credible interval states that there is a 95% chance that the true population value falls within this interval.
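The updating of beliefs can be made concrete with a toy example. The sketch below is not the trial's model: it assumes a single Poisson mean with a conjugate Gamma prior, so that the posterior is available in closed form, and approximates the 95% credible interval by Monte Carlo sampling. The prior parameters, the counts, and the function names are all hypothetical.

```python
import random

def gamma_poisson_update(prior_shape, prior_rate, counts):
    """Conjugate update of a Gamma(shape, rate) prior on a Poisson mean."""
    return prior_shape + sum(counts), prior_rate + len(counts)

def credible_interval(shape, rate, level=0.95, draws=20000, seed=0):
    """Approximate equal-tailed credible interval from posterior draws."""
    rng = random.Random(seed)
    # random.gammavariate takes a shape and a scale (scale = 1 / rate)
    samples = sorted(rng.gammavariate(shape, 1.0 / rate) for _ in range(draws))
    lo = samples[int(draws * (1 - level) / 2)]
    hi = samples[int(draws * (1 + level) / 2) - 1]
    return lo, hi

counts = [4, 7, 5, 9, 6]  # hypothetical weekly IM counts
shape, rate = gamma_poisson_update(2.0, 0.5, counts)  # hypothetical prior
posterior_mean = shape / rate
lo, hi = credible_interval(shape, rate)
print(posterior_mean, lo, hi)
```

With these inputs the posterior is Gamma(33, 5.5), whose mean is 6. The interval is the range that holds 95% of the posterior probability for the underlying rate.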

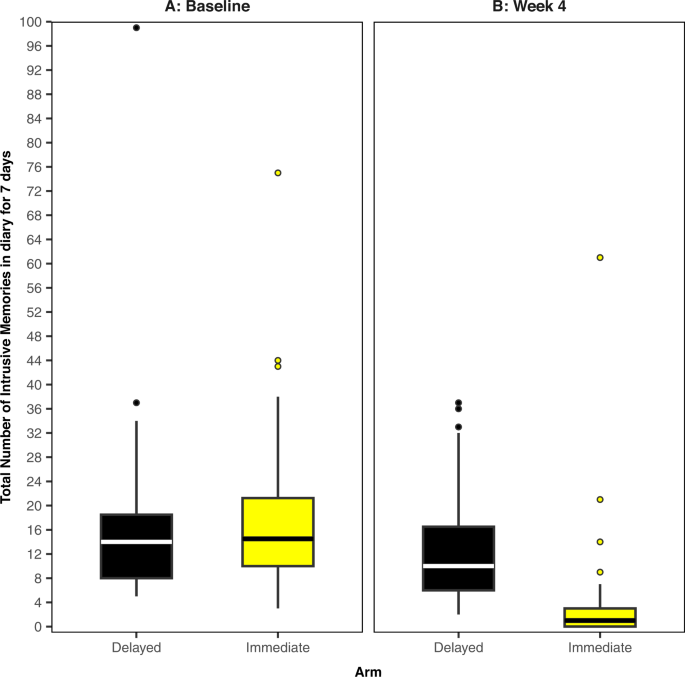

All analyses were completed in R (version 4.1.2) on an intention-to-treat basis. We fitted a Bayesian model in which the primary outcome (intrusive memory count) was modelled using a Poisson linear mixed model. The baseline number of IMs and treatment assignment were fitted as fixed effects, with a random intercept for participant. As the daily IM diary data used to calculate the primary outcome were collected sequentially over time (baseline or week 4), we used time series methods and an expectation-maximisation algorithm [38] to impute missing values. We present the median and interquartile range (IQR) to account for outliers and skewed primary outcome data (Fig. 2 and Supplementary Fig. 1); other summary statistics such as the mean and standard deviation (SD) can be found in Supplementary Table 2. The posterior mean of the treatment assignment parameter and the associated 95% credible interval are also presented. The Supplementary Information provides full details regarding software, model assumptions, priors, missing data, model fit (Supplementary Figs. 2 and 3 and Supplementary Table 3), and sensitivity analyses (Supplementary Figs. 4–7).
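One plausible way to write this model down is given below. The notation is an assumption made for illustration: a log link is assumed (the usual choice for Poisson models), and the text does not state the scale on which the baseline count entered, so the authors' exact specification should be taken from the Supplementary Information. Here $y_i$ is the week-4 IM count for participant $i$, $x_i$ the baseline IM count, $z_i$ the treatment indicator (1 = immediate arm), and $u_i$ the participant-level random intercept.

```latex
\begin{aligned}
y_i \mid \lambda_i &\sim \mathrm{Poisson}(\lambda_i), \\
\log \lambda_i &= \beta_0 + \beta_1 x_i + \beta_2 z_i + u_i, \\
u_i &\sim \mathcal{N}(0, \sigma_u^2).
\end{aligned}
```

Under this parameterisation, $\beta_2$ is the treatment assignment parameter, and $\exp(\beta_2)$ is the multiplicative change in the expected week-4 count for the immediate arm relative to the delayed arm.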

The midline of the boxplot is the median value, with the upper and lower limits of the box being the third and first quartiles (75th and 25th percentiles), and the whiskers covering 1.5 times the IQR. The dots depict outliers (each dot represents one participant whose value lay more than 1.5 times the IQR above the third quartile or below the first quartile). All outliers are included in this figure. A Baseline measure for each arm. Total number of IMs of traumatic events recorded by participants in a brief daily online intrusive memory diary for 7 days during the baseline week (i.e. run-in week) for both arms (black = delayed arm (control); n = 39: usual care for 4 weeks; yellow = immediate arm; n = 36: immediate access to the intervention following the baseline week: the intervention consisted of a cognitive task involving a trauma reminder-cue plus Tetris® computer gameplay using mental rotation plus symptom monitoring), showing that the two arms did not differ at baseline (i.e., before the intervention was provided to the immediate arm). B The primary outcome measure for each arm. Total number of IMs of traumatic events recorded by participants in a brief daily online intrusive memory diary for 7 days during week 4 for each arm (black = delayed arm (control); n = 39: usual care for 4 weeks; yellow = immediate arm; n = 36: immediate access to the intervention following the baseline week: the intervention consisted of a cognitive task involving a trauma reminder-cue plus Tetris® computer gameplay using mental rotation plus symptom monitoring), showing that the immediate arm had fewer IMs at week 4 compared to the delayed arm, and that the number of IMs for the immediate arm decreased between the baseline week and week 4.

In Bayesian hypothesis testing, a metric known as a Bayes Factor (BF) [37] provides a continuous measure quantifying how well a hypothesis predicts the data relative to a competing hypothesis. By contrast, conventional significance tests using p values do not provide any information about the alternative hypothesis. For example, a Bayes Factor (BF10) of 5 indicates that the data are five times more likely under Hypothesis 1 (H1) than under Hypothesis 0 (H0). This means that H1 provides better probabilistic prediction for the observed data than does H0 [36]. The Bayesian framework requires the explicit specification of (at least) two models to compare, whereas the frequentist framework relies on only one [39]. Clinical researchers are often interested in several questions when developing a treatment: does the treatment work better, worse, or no differently than an existing placebo or active control? Model comparison within a Bayesian framework allows for a more direct way of answering such questions.
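To show what a BF measures, the following toy calculation uses a deliberately simple binomial setting rather than the trial's Poisson model. H0 fixes a success probability at 0.5, while H1 places a uniform prior on it, in which case the marginal likelihood of any outcome is 1 / (n + 1). The function name and the data (16 successes in 20 trials) are hypothetical.

```python
from math import comb

def bf10_binomial(k, n, theta0=0.5):
    """Bayes factor for H1: theta ~ Uniform(0, 1) versus H0: theta = theta0.

    Under the uniform prior, the marginal likelihood of k successes in
    n trials is 1 / (n + 1); under H0 it is the binomial probability.
    """
    marginal_h1 = 1.0 / (n + 1)
    likelihood_h0 = comb(n, k) * theta0**k * (1 - theta0) ** (n - k)
    return marginal_h1 / likelihood_h0

bf = bf10_binomial(k=16, n=20)
print(round(bf, 2))  # about 10.31: the data are roughly 10 times more likely under H1
```

A value below 1 would instead favour H0. For instance, 10 successes in 20 trials gives a BF10 of about 0.27 under the same assumptions.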

In contrast to p values, BFs retain their meaning in situations where data are provided over time, regardless of any sampling decisions. Data can therefore be analysed repeatedly as they become available, without needing special corrections (see Schönbrodt et al. [30]). The Bayesian approach thus allows interim analyses to guide decision-making during RCTs, making it attractive for adaptive trial designs [40, 41].

For this study, BFs were computed repeatedly during interim analyses, starting with 20 participants and approximately every 4–10 participants thereafter, up to a maximum of 150. Early stopping of the trial for sufficient evidence of either a negative or a positive effect was considered if the respective BF exceeded a pre-defined threshold of 20, which would suggest strong evidence [37]. This sequential BF stopping rule is a suggestion, not a prescription [30]; in Bayesian analyses we are able to sample until hypotheses have been convincingly proven/disproven, or until resources run out [30, 42].

We first calculated a BF for a negative effect of the intervention (i.e. whether participants in the immediate arm had a greater number of IMs at week 4 than the delayed arm). If this BF exceeded 20, we concluded that there was strong evidence for a negative effect of the intervention and the trial may need to be altered or stopped. We then calculated a BF for a positive treatment effect (i.e. whether those in the immediate arm had fewer IMs at week 4 than the delayed arm, as opposed to having no difference). If this BF exceeded 20, we concluded that there was strong evidence for the effectiveness of the intervention and consideration could be given to stopping the trial early (Supplementary Information).
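The order of these checks can be sketched as a small decision function. It assumes the two BFs have already been computed elsewhere, and it returns a label for consideration rather than an automatic action, since the stopping rule was a guide and not a prescription. The function name, the labels, and the example interim values are hypothetical.

```python
def interim_decision(bf_negative, bf_positive, n, threshold=20.0, n_max=150):
    """Classify one interim look.

    bf_negative: BF for a negative effect (more IMs in the immediate arm).
    bf_positive: BF for a positive effect (fewer IMs in the immediate arm).
    The negative-effect check comes first, mirroring the order in the text.
    """
    if bf_negative > threshold:
        return "harm"      # consider altering or stopping the trial
    if bf_positive > threshold:
        return "benefit"   # consider stopping early for effectiveness
    if n >= n_max:
        return "max_n"     # maximum sample size reached
    return "continue"

# Hypothetical interim looks: (participants, BF negative, BF positive)
looks = [(20, 0.4, 3.1), (28, 0.2, 9.8), (36, 0.1, 24.5)]
decisions = [interim_decision(neg, pos, n) for n, neg, pos in looks]
print(decisions)
```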

Originally, we planned to explore potential “mechanistic” optimisations to improve the effectiveness of the intervention (e.g., time playing Tetris®). Given the rapid accumulation of evidence in favour of a positive treatment effect (see “Results”), we instead focused on practical “usability enhancements” to aid smooth digital delivery and user experience (Supplementary Information). These included repeating the intrusive memory visualisation step, adding a summary instruction video, and adding a graphical representation of daily IMs for the 4 weeks. An optimisation enhancement round was conducted on Feb 7, 2022, after 55 participants had been randomised. When testing for the effect of these enhancements, sample size analyses were first conducted to estimate the number of participants needed to test for a positive treatment effect, and to compare pre- and post-optimisation groups (Supplementary Figs. 8 and 9).
